Funnel discipline: Measures of success and tracking progress in closing the gaps

Douglas K. Smith, Quentin Hope, Tim Griggs, Knight-Lenfest Newsroom Initiative

This is an excerpt from “Table Stakes: A Manual for Getting in the Game of News,” published Nov. 14, 2017.

Funnels are their own scorecards! By setting, pursuing and monitoring performance against specific goals for each stage of the funnel, you and your colleagues can track progress, learn what works and what doesn’t, and, iteration by iteration, close your key gaps.

The primary objective of change is performance, not change itself. Consider, for example, Miami’s efforts to build newsroom skills. They set goals requiring all reporters to increase traffic by 7.5% – then provided the training and support to increase the odds that reporters succeeded. Dallas also made performance results (10% traffic increases plus gains in a specially designed engagement index) the primary objective of skills training.

The same principle applies to funnels – and to continually improving the results that funnels produce. Don’t just say, “Use a funnel.” Instead, set and update specific goals at each stage of any particular funnel – and expect folks to learn what works versus what doesn’t by attempting to meet those goals.
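For readers who track funnels in a spreadsheet or script, a stage-by-stage scorecard can be sketched as follows. This is a minimal, hypothetical illustration, not a method described by any of the newsrooms mentioned here: the `Stage` class, the `scorecard` function, the stage names, counts and goal rates are all invented for the example. Each stage’s goal is expressed as a target conversion rate from the stage before it, and the script reports whether the actual rate met that goal.

```python
from dataclasses import dataclass


@dataclass
class Stage:
    name: str
    count: int              # people who reached this stage during the period
    goal_rate: float = 0.0  # target conversion from the previous stage (ignored for the first stage)


def scorecard(stages):
    """Return (stage name, actual rate, goal rate, met goal?) for every stage after the first."""
    rows = []
    for prev, cur in zip(stages, stages[1:]):
        # Actual conversion from the previous stage; guard against an empty earlier stage.
        actual = cur.count / prev.count if prev.count else 0.0
        rows.append((cur.name, actual, cur.goal_rate, actual >= cur.goal_rate))
    return rows


# Hypothetical funnel and numbers, for illustration only.
funnel = [
    Stage("Visitors", 100_000),
    Stage("Newsletter sign-ups", 2_000, goal_rate=0.015),
    Stage("Subscribers", 150, goal_rate=0.10),
]

for name, actual, goal, met in scorecard(funnel):
    print(f"{name}: {actual:.1%} vs goal {goal:.1%} -> {'met' if met else 'missed'}")
```

Expressing each goal as a stage-to-stage rate, rather than as an absolute count, makes it easier to see which specific step in the funnel is falling short and where the next round of experiments should focus.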

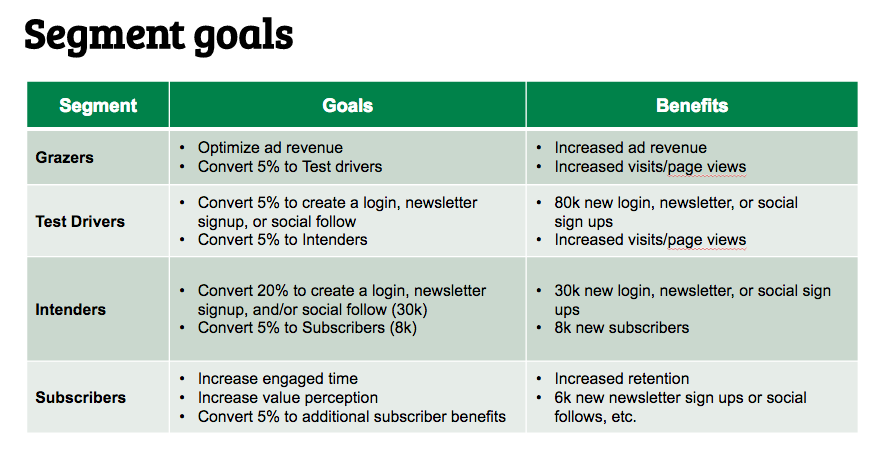

Here, for example, are the initial goals – and benefits of those goals – that Minneapolis set for the funnel used to convert different audience segments into digital subscribers:

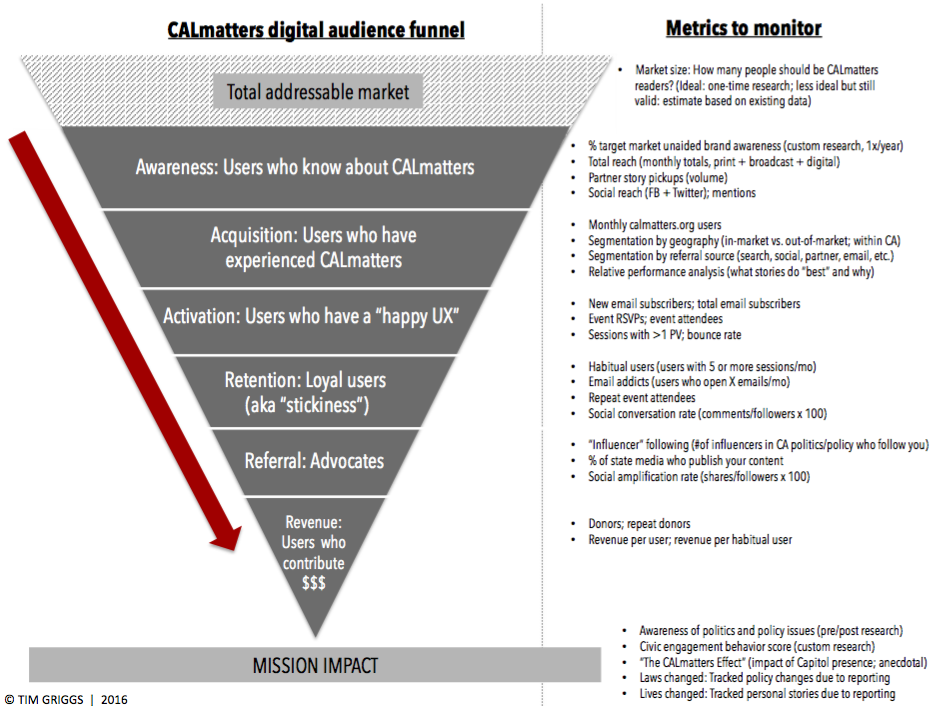

For illustrative purposes, here’s an example of a funnel that serves as a scorecard, from the nonprofit news organization CALmatters:

By setting goals for each stage of the funnel and monitoring results, you and your colleagues will discover which steps work best for the audiences you seek to serve and the revenue and financial goals you wish to achieve. Folks at the Texas Tribune, for example, used continuous testing and learning to discover a strong link between event attendance and membership. When they looked at any one event in isolation, they observed that only a single-digit percentage of attendees became paying members. As an isolated data point, that might have led them to conclude that event attendees were unlikely to join, or that they had an execution problem in converting event attendees to members. However, when they looked more closely at the data, they saw that when those same folks attended at least three events, the propensity to become members rose nearly tenfold. This, in turn, led to a shift in tactics: instead of making the conversion of one-time event attendees into members the primary objective, the Texas Tribune folks worked hard to get those attendees to come to a second – then a third – event.
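The kind of analysis that revealed this pattern, looking at conversion by how many events a person attended rather than event by event, can be sketched in a few lines. This is a hypothetical illustration only: the `conversion_by_attendance` function and the attendee records are invented for the example and are not the Texas Tribune’s data or tooling. Each record is assumed to be a pair of (events attended, became a paying member?), and attendees with three or more events are grouped into a single “3+” bucket.

```python
from collections import defaultdict


def conversion_by_attendance(attendees, cap=3):
    """Group attendees by number of events attended (counts at or above `cap`
    share one bucket) and return {bucket: share who became members}."""
    totals = defaultdict(int)
    members = defaultdict(int)
    for events_attended, became_member in attendees:
        bucket = min(events_attended, cap)
        totals[bucket] += 1
        if became_member:
            members[bucket] += 1
    # Sorted so buckets read 1, 2, 3+ in order.
    return {b: members[b] / totals[b] for b in sorted(totals)}


# Hypothetical records: (events attended, became a paying member?)
attendees = (
    [(1, False)] * 95 + [(1, True)] * 5
    + [(2, False)] * 17 + [(2, True)] * 3
    + [(3, False)] * 6 + [(4, True)] * 2 + [(5, True)] * 2
)

for bucket, rate in conversion_by_attendance(attendees).items():
    label = f"{bucket}+" if bucket == 3 else str(bucket)
    print(f"{label} event(s): {rate:.0%} became members")
```

Grouping by attendance count, rather than averaging across all attendees of a single event, is what surfaces a gap between one-time and repeat attendees; a per-event view blends both groups into one low number.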